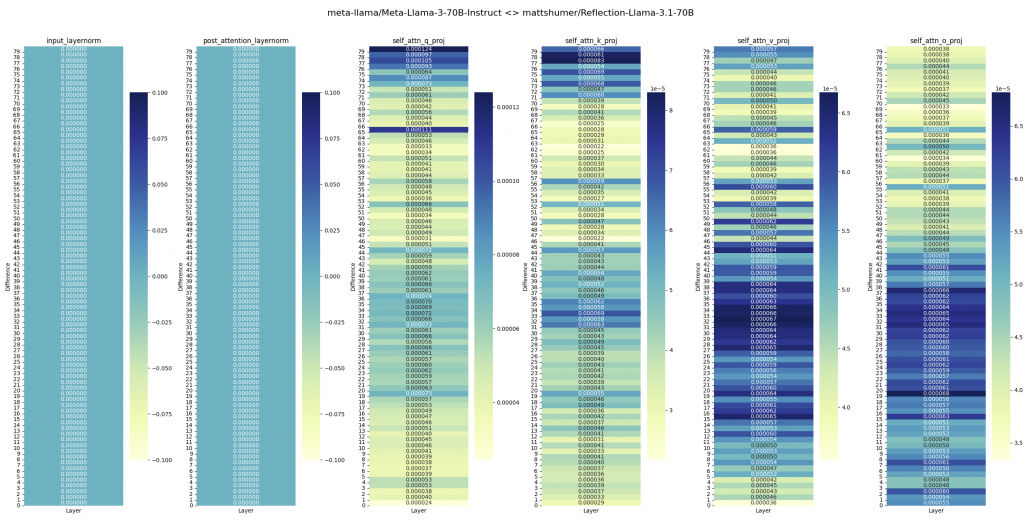

After measuring the weight differences, this model appears to be Llama 3 with LoRA tuning applied, not Llama 3.1.
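The idea behind this kind of comparison can be shown with a toy sketch: compute the mean absolute difference between the fine-tuned weights and each candidate base, and treat the base with the far smaller difference as the likely parent. Everything here is illustrative. Plain Python lists stand in for weight tensors, and `base_a`, `base_b`, `finetuned`, and `mean_abs_diff` are hypothetical names, not real checkpoints or library functions. In real checkpoints the two candidate bases may themselves be related, so the gap can be less dramatic than in this toy.

```python
import random


def mean_abs_diff(a, b):
    """Mean absolute element-wise difference between two flat weight lists."""
    if len(a) != len(b):
        raise ValueError("weight lists must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


rng = random.Random(0)  # fixed seed so the toy run is reproducible
n = 1000

# Hypothetical stand-ins for the same weight matrix in two candidate base models.
base_a = [rng.gauss(0.0, 0.02) for _ in range(n)]
base_b = [rng.gauss(0.0, 0.02) for _ in range(n)]

# Toy "fine-tune": base_a plus a small perturbation (an assumption for illustration).
finetuned = [w + rng.gauss(0.0, 0.001) for w in base_a]

diff_a = mean_abs_diff(finetuned, base_a)
diff_b = mean_abs_diff(finetuned, base_b)

# The candidate with the much smaller difference is the more plausible parent.
likely_parent = "base_a" if diff_a < diff_b else "base_b"
print(f"diff vs base_a: {diff_a:.6f}")
print(f"diff vs base_b: {diff_b:.6f}")
print(f"likely parent: {likely_parent}")
```

The same comparison, applied per layer and per component to real checkpoints, is what the full script further down computes.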

References: https://huggingface.co/mattshumer/Reflection-Llama-3.1-70B/discussions/38

https://gist.github.com/StableFluffy/1c6f8be84cbe9499de2f9b63d7105ff0

https://www.reddit.com/r/LocalLLaMA/comments/1fb6jdy/reflectionllama3170b_is_actually_llama3

My test code, run on Windows; no GPU is required:

    from transformers import AutoModelForCausalLM, AutoTokenizer
    import torch
    import matplotlib.pyplot as plt
    import seaborn as sns
    import os

    # Work around the duplicate OpenMP runtime error that can occur on Windows.
    os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"
    torch.set_num_threads(1)

    base_model_name = "meta-llama/Meta-Llama-3-70B-Instruct"
    chat_model_name = "mattshumer/Reflection-Llama-3.1-70B"

    # Both models are loaded on the CPU in bfloat16.
    base_model = AutoModelForCausalLM.from_pretrained(base_model_name, torch_dtype=torch.bfloat16)
    chat_model = AutoModelForCausalLM.from_pretrained(chat_model_name, torch_dtype=torch.bfloat16)


    def calculate_weight_diff(base_weight, chat_weight):
        # Mean absolute element-wise difference between two weight tensors.
        return torch.abs(base_weight - chat_weight).mean().item()


    def calculate_layer_diffs(base_model, chat_model):
        # One dict of per-component differences for each decoder layer.
        layer_diffs = []
        for base_layer, chat_layer in zip(base_model.model.layers, chat_model.model.layers):
            layer_diff = {
                'input_layernorm': calculate_weight_diff(base_layer.input_layernorm.weight, chat_layer.input_layernorm.weight),
                # 'mlp_down_proj': calculate_weight_diff(base_layer.mlp.down_proj.weight, chat_layer.mlp.down_proj.weight),
                # 'mlp_gate_proj': calculate_weight_diff(base_layer.mlp.gate_proj.weight, chat_layer.mlp.gate_proj.weight),
                # 'mlp_up_proj': calculate_weight_diff(base_layer.mlp.up_proj.weight, chat_layer.mlp.up_proj.weight),
                'post_attention_layernorm': calculate_weight_diff(base_layer.post_attention_layernorm.weight, chat_layer.post_attention_layernorm.weight),
                'self_attn_q_proj': calculate_weight_diff(base_layer.self_attn.q_proj.weight, chat_layer.self_attn.q_proj.weight),
                'self_attn_k_proj': calculate_weight_diff(base_layer.self_attn.k_proj.weight, chat_layer.self_attn.k_proj.weight),
                'self_attn_v_proj': calculate_weight_diff(base_layer.self_attn.v_proj.weight, chat_layer.self_attn.v_proj.weight),
                'self_attn_o_proj': calculate_weight_diff(base_layer.self_attn.o_proj.weight, chat_layer.self_attn.o_proj.weight)
            }
            layer_diffs.append(layer_diff)
        return layer_diffs


    def visualize_layer_diffs(layer_diffs):
        num_layers = len(layer_diffs)
        num_components = len(layer_diffs[0])

        # One single-column heatmap per component, with one row per layer.
        fig, axs = plt.subplots(1, num_components, figsize=(24, 8))
        fig.suptitle(f"{base_model_name} <> {chat_model_name}", fontsize=16)

        for i, component in enumerate(layer_diffs[0].keys()):
            component_diffs = [[layer_diff[component]] for layer_diff in layer_diffs]
            sns.heatmap(component_diffs, annot=True, fmt=".6f", cmap="YlGnBu", ax=axs[i], cbar_kws={"shrink": 0.8})
            axs[i].set_title(component)
            axs[i].set_xlabel("Layer")
            axs[i].set_ylabel("Difference")
            axs[i].set_xticks([])
            axs[i].set_yticks(range(num_layers))
            axs[i].set_yticklabels(range(num_layers))
            axs[i].invert_yaxis()

        plt.tight_layout()
        plt.show()


    layer_diffs = calculate_layer_diffs(base_model, chat_model)
    visualize_layer_diffs(layer_diffs)

My test results: