1. 什么是嵌入?

OpenAI的文本嵌入衡量文本字符串的相关性。嵌入通常用于:

- 搜索(Search) 其中结果按与查询字符串的相关性排名

- 聚类分析(Clustering) 其中文本字符串按相似性分组

- 建议(Recommendations) 建议使用具有相关文本字符串的项目

- 异常检测(Anomaly detection) 识别相关性不大的异常值

- 多样性测量(Diversity measurement) 分析相似性分布

- 分类(Classification) 其中文本字符串按其最相似的标签分类

嵌入是浮点数的向量(列表)。两个向量之间的距离衡量它们的相关性。小距离表示高相关性,大距离表示低相关性。

请访问OpenAI的定价页面,了解嵌入定价。请求根据输入中的令牌数量计费。

2. 如何获取嵌入

要获取嵌入,请将文本字符串与嵌入模型名称(例如 text-embedding-3-small )一起发送到嵌入 API 端点(例如 )。响应将包含一个嵌入(浮点数列表),您可以将其提取、保存在矢量数据库中,并用于许多不同的用例:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

# pip install openai import os from openai import OpenAI OPENAI_API_KEY = os.getenv('OPENAI_API_KEY') client = OpenAI( api_key=OPENAI_API_KEY ) response = client.embeddings.create( input="What did the author do growing up?", model="text-embedding-3-small" ) print(response) |

下面是输出结果,总结1536个数据:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 |

CreateEmbeddingResponse(data=[Embedding(embedding= [ 0.010480836033821106, -0.014171650633215904, 0.03369464352726936, 0.051227692514657974, 0.010406885296106339, -0.05329831317067146, 0.0372442789375782, 0.04006785526871681, -0.0686262845993042, 0.0014496025396510959, -0.017586829140782356, -0.007435410283505917, -0.013075835071504116, 0.04649484530091286, 0.0025210478343069553, 0.014292661100625992, 0.035738375037908554, -0.0031950080301612616, -0.034743402153253555, -0.022911282256245613, 0.04254184290766716, 0.008706018328666687, -0.0020807047840207815, 0.007603480480611324, 0.020410403609275818, -0.01528763398528099, 0.011549760587513447, 0.023798691108822823, 0.005946311634033918, 0.002961390884593129, -0.08260969817638397, -0.02187597192823887, -0.022010428830981255, -0.012833814136683941, 0.05238401144742966, -0.033264387398958206, 0.03450138121843338, -0.03587283194065094, 0.07459612190723419, 0.02861221320927143, -0.01792296953499317, -0.04864614084362984, -0.04939909279346466, 0.03861572965979576, -0.03130133077502251, 3.4375538234598935e-05, 0.01131446287035942, 0.042595624923706055, 0.02471299096941948, -0.009129554964601994, -0.038561947643756866, -0.032968584448099136, 0.015166623517870903, 0.009055604226887226, -0.04041744023561478, 0.008887534029781818, -0.06824980676174164, -0.048753704875707626, -0.0056505086831748486, 0.0007974914624355733, 0.027778586372733116, -0.027993716299533844, -0.04305277392268181, -0.0010504366364330053, 0.002873994642868638, -0.005912697408348322, -0.05351344123482704, -0.012195148505270481, -0.013149785809218884, 0.025143250823020935, 0.01058840099722147, 0.010440499521791935, -0.015906130895018578, -0.016699420288205147, -0.020370066165924072, -0.043483033776283264, -0.019079290330410004, -0.05453530699014664, -0.020638978108763695, 0.011146392673254013, 0.03686780482530594, 0.0033160182647407055, -0.0568479485809803, 0.056901730597019196, 0.025828976184129715, 0.01318340003490448, 0.04929152876138687, 0.006645482033491135, -0.02410794049501419, -0.05824628844857216, 0.0006546321092173457, 0.0024118025321513414, 0.025519726797938347, -0.008463998325169086, 0.06744307279586792, -0.037405628710985184, 0.0017311195842921734, 0.028665995225310326, 0.013143062591552734, 0.009297624230384827, 0.005216888152062893, -0.017398592084646225, 0.019832242280244827, -0.0033311445731669664, -0.010373272001743317, 0.03283412754535675, -0.02292472869157791, -0.025022240355610847, -0.01657840982079506, -0.014924603514373302, -0.13789795339107513, -0.00990267563611269, -0.011556483805179596, 0.0039462801069021225, -0.062495097517967224, 0.012242208234965801, -0.026837395504117012, -0.035039205104112625, -0.04168132320046425, 0.008827028796076775, -0.03361397236585617, -0.012067415751516819, 0.006480773910880089, -0.04816209897398949, 0.05674038454890251, 0.021593615412712097, -0.019052399322390556, -0.019549885764718056, -0.034366924315690994, -0.02117680199444294, 0.025546617805957794, 0.013203567825257778, 0.0030622328631579876, -0.011664047837257385, -0.005105962045490742, -0.04843100905418396, 0.018891051411628723, -0.005653869826346636, 0.004716040100902319, 0.012336327694356441, -0.017667504027485847, 0.01544898096472025, 0.013929629698395729, -0.005569835193455219, 0.02529115229845047, 0.02560039982199669, 0.07685498148202896, 0.059268154203891754, 0.024968458339571953, 0.014601909555494785, 0.022803718224167824, 0.038669511675834656, -0.021445713937282562, 0.01386240217834711, 0.000705053040292114, 0.015072504989802837, 0.042488060891628265, -0.02295161969959736, -0.01068252045661211, 0.047489818185567856, 0.011011936701834202, -0.0017344809602946043, -0.023462552577257156, -0.018581803888082504, -0.07126162201166153, -0.006773215252906084, 0.01949610374867916, -0.02863910421729088, 0.0052975621074438095, -0.014306106604635715, -0.007388351019471884, -0.002801724476739764, 0.04566121846437454, 0.03737873584032059, 0.020262502133846283, -0.020665869116783142, -0.022588588297367096, -0.019549885764718056, -0.03479718416929245, 0.01823221892118454, -0.03576526418328285, 0.047301579266786575, -0.07126162201166153, -0.036814022809267044, -0.04383261874318123, 0.010864035226404667, -0.009620318189263344, -0.032000500708818436, 0.030118118971586227, 0.004094181582331657, -0.008894257247447968, 0.023381877690553665, -0.012343049980700016, -0.0392342284321785, 0.0391804464161396, -0.042111583054065704, 0.07045488059520721, 0.0034689619205892086, -0.05695551261305809, -0.013741391710937023, 0.06518421322107315, 0.00286895246244967, 0.018689367920160294, -0.01872970536351204, 0.010158142074942589, -0.027859259396791458, 0.018595248460769653, 0.04781251400709152, 0.0037983788643032312, -0.004927808418869972, 0.05031339079141617, -0.02323397621512413, 0.0033529936335980892, 0.02831641025841236, -0.008410215377807617, 0.02194320037961006, 0.03423246741294861, -0.010501004755496979, -0.026541592553257942, 0.02028939314186573, 0.020033927634358406, 0.03850816562771797, 0.005502607207745314, 0.033371951431035995, 0.03038703091442585, -0.03762075677514076, -0.02625923417508602, -0.03611484915018082, -0.024686099961400032, -0.04576878249645233, 0.042219147086143494, 0.04625282436609268, -0.02382558211684227, 0.00023697849246673286, -0.018891051411628723, 0.008699296042323112, -0.030118118971586227, 0.03420557826757431, -0.04036365821957588, 0.027402110397815704, -0.03463583439588547, 0.014306106604635715, 0.004379900638014078, 0.024928120896220207, -0.02225244976580143, 0.044451117515563965, -0.027213871479034424, -0.04743603616952896, 0.003684091381728649, -0.016430508345365524, -0.016390172764658928, 0.025721410289406776, 0.02137848548591137, 0.02500879392027855, -0.042299821972846985, 0.035550136119127274, 0.009586704894900322, -0.030413921922445297, 0.049856241792440414, -0.02979542501270771, 0.04321411997079849, -0.00595303438603878, 0.010642183013260365, -0.034743402153253555, 0.005606810562312603, 0.034178685396909714, -0.011583374813199043, 0.035442572087049484, -0.0007319442229345441, -0.022104548290371895, -0.053674791008234024, -0.021391931921243668, 0.02136504091322422, 0.004729485604912043, -0.01636328175663948, -0.03928801044821739, 0.020638978108763695, 0.010427054017782211, -0.014776702038943768, 0.01636328175663948, -0.007536252494901419, 0.05216888338327408, 0.01569100096821785, 0.029956771060824394, -0.01265229843556881, 0.02156672440469265, 0.020504523068666458, 0.027886150404810905, 0.013808619230985641, -0.029526513069868088, 0.016645638272166252, -0.025560064241290092, 0.03990650549530983, -0.03479718416929245, 0.02488778531551361, -0.013781728222966194, -0.015744784846901894, 0.0066353981383144855, -0.01735825464129448, 0.029096253216266632, 0.06039758399128914, 0.03175847977399826, 0.03420557826757431, 0.05214199423789978, 0.030064336955547333, 0.021539833396673203, 0.033775318413972855, 0.04477380961179733, 0.018097762018442154, 0.004453851375728846, 0.003916027490049601, 0.04146619513630867, -0.022386904805898666, -0.010117805562913418, 0.011186730116605759, -0.024470971897244453, -0.024349961429834366, -0.019536439329385757, 0.02410794049501419, -0.05421261489391327, 0.018205326050519943, -0.009129554964601994, -0.01860869489610195, -0.023556672036647797, -0.025546617805957794, -0.009304347448050976, 0.014870820567011833, 0.0392342284321785, -0.006648843642324209, -0.0029126505833119154, -0.01337836030870676, -0.05598743259906769, -0.032296303659677505, 0.015193515457212925, 0.011408582329750061, -0.03146267682313919, -0.004638728220015764, 0.0539168119430542, 0.0070723798125982285, -0.01616159826517105, -0.03977205231785774, -0.03498542308807373, -0.0021798659581691027, -0.0010285875760018826, 0.002588275820016861, -0.010050577111542225, 0.011650602333247662, -0.0002556762774474919, -0.025949986651539803, 0.03961070254445076, 0.006420268677175045, 0.023354986682534218, 0.018783487379550934, -0.0016983458772301674, -0.025371825322508812, -0.002534493338316679, -0.00015094774425961077, 0.016941441223025322, 0.02616511471569538, 0.031220655888319016, -0.027993716299533844, -0.025707965716719627, 0.049533549696207047, 0.031812261790037155, -0.036706455051898956, 0.023368433117866516, -0.025049131363630295, 0.025076022371649742, -0.01206069253385067, -0.030817288905382156, -0.022817164659500122, -0.0016370003577321768, -0.00707910256460309, 0.014386779628694057, -0.017129680141806602, 0.04065946117043495, -0.0005369831924326718, -0.049748677760362625, -0.0342862494289875, 0.03654510900378227, 0.018097762018442154, 0.08029705286026001, 0.0027277737390249968, -0.06432369351387024, 0.03194671869277954, -0.01317667681723833, 0.016403617337346077, -0.006897586863487959, -0.016228824853897095, 0.0259903222322464, -0.023785246536135674, -0.003697536885738373, 0.022575143724679947, 0.0519268624484539, -0.028854232281446457, -0.042004019021987915, 0.04060567915439606, 0.025116359815001488, 0.012558179907500744, 0.004638728220015764, 0.0308979619294405, 0.012551456689834595, -0.00897493027150631, 0.025640737265348434, 0.07470369338989258, 0.02147260494530201, 0.018312891945242882, -0.018702814355492592, -0.010312766768038273, 0.007697599474340677, -0.04057878628373146, -0.013875847682356834, 0.0470326691865921, -0.05345965921878815, 0.02117680199444294, -0.03568459302186966, 0.0070185973308980465, -0.010729579254984856, 0.0392342284321785, 0.02607099711894989, -0.09374264627695084, -0.03165091574192047, 0.020464185625314713, -0.03751319274306297, 0.0176406130194664, 0.011153115890920162, 0.004544608760625124, -0.021996982395648956, 0.021647397428750992, 0.03380221128463745, 0.006779938004910946, -0.0010100998915731907, 0.007442133501172066, -0.04342925176024437, 0.005774880293756723, -0.028961798176169395, -0.007549697998911142, 0.0755104273557663, -0.006154718343168497, -0.022696154192090034, 0.012813646346330643, 0.024444080889225006, -0.04439733177423477, 0.014964940026402473, -0.030252574011683464, -0.02861221320927143, 0.027092861011624336, 0.003552996786311269, -0.031328219920396805, 0.011233788914978504, 0.001621033763512969, -0.07142296433448792, -0.0013975008623674512, -0.008484166115522385, 0.0016201934777200222, 0.032699670642614365, 0.029876098036766052, -0.004282420035451651, -0.022776827216148376, 0.011785058304667473, -0.05136214941740036, 0.021929755806922913, -0.0018504491308704019, -0.04496204853057861, 0.01851457543671131, 0.010232092812657356, 0.023960039019584656, -0.014050640165805817, 0.01245061494410038, -0.06045136600732803, -8.970728958956897e-05, 0.023072630167007446, -0.026205452159047127, 0.0259903222322464, -0.042219147086143494, 0.016148151829838753, -0.02853153832256794, -0.027496227994561195, -0.017371701076626778, -0.06039758399128914, 0.0411703921854496, 0.046602409332990646, 0.07803819328546524, -0.030924852937459946, -0.013741391710937023, -0.02088099904358387, -0.07174566388130188, 0.033667754381895065, 0.030924852937459946, 0.03861572965979576, -0.05561095476150513, 0.008685850538313389, 0.0066757346503436565, -0.015596882440149784, 0.025049131363630295, 0.00910938624292612, 0.011334631592035294, -0.04792007803916931, -0.011106056161224842, 0.022212112322449684, -0.008322819136083126, 0.04525785148143768, -0.062495097517967224, 0.06830359250307083, -0.025237370282411575, -0.04275697097182274, -0.01999359019100666, 0.012484229169785976, 0.018971726298332214, -0.03517365828156471, -0.004178216680884361, -0.033963557332754135, -0.020638978108763695, 0.0067059872671961784, 0.008867365308105946, 0.03576526418328285, 0.009432080201804638, 0.03657200187444687, 0.002747942227870226, 0.009983349591493607, 0.005576557945460081, 0.014171650633215904, -0.03401733934879303, 0.03899220749735832, 0.022037319839000702, -0.03076350688934326, -0.014897712506353855, 0.06314048171043396, -0.017909523099660873, -0.00629589706659317, 0.022198665887117386, 0.00940518919378519, 0.009647210128605366, -0.005374873988330364, -0.016309499740600586, 0.03312993049621582, 0.016645638272166252, -0.009210227988660336, 0.0027765140403062105, -0.02753656543791294, -0.010312766768038273, 0.04146619513630867, 0.06098918989300728, 0.03735184669494629, 0.004346286412328482, 0.0156775563955307, -0.020316284149885178, -0.006779938004910946, -0.018891051411628723, -0.022870946675539017, 0.01746581867337227, -0.013916184194386005, 0.008006848394870758, -0.01636328175663948, 0.026151670143008232, 0.0225482527166605, -0.011193452402949333, 0.016793539747595787, 0.0006361444247886539, -0.011119501665234566, 0.006500942166894674, -0.025264261290431023, 0.008853919804096222, 0.008437106385827065, 0.014830484054982662, -0.0019832244142889977, -0.006477412302047014, -0.024860892444849014, 0.0019899471662938595, 0.006648843642324209, 0.010763193480670452, 0.01215481199324131, 0.015664109960198402, -0.04138552024960518, -0.03216184675693512, -0.01586579531431198, -0.006373208947479725, 0.022427242249250412, -0.030252574011683464, -0.018985170871019363, -0.006161441095173359, 0.023839028552174568, -0.008611899800598621, -0.042703188955783844, -0.03157024085521698, 0.023758355528116226, 0.05483110994100571, -0.00573790492489934, 0.012538011185824871, 0.0066118682734668255, -0.023771800100803375, 0.03253832459449768, -0.041116610169410706, 0.03584593906998634, 0.00127480982337147, 0.010897649452090263, -0.005196719896048307, -0.01950954832136631, -0.04235360398888588, -0.02979542501270771, 0.06136566773056984, 0.00799340195953846, -0.01844734698534012, 0.03060215897858143, 0.021432267501950264, 0.003131141420453787, -0.034366924315690994, -0.0333450585603714, 0.00045336844050325453, 0.0022588588763028383, -0.02636680006980896, 0.022763380780816078, -0.01920030079782009, -0.0028134894091635942, 0.0032202184665948153, 0.0023630622308701277, 0.025842420756816864, -0.020800326019525528, -0.00940518919378519, 0.018756596371531487, 0.00590597465634346, -0.023986930027604103, -0.034743402153253555, 0.05466976389288902, -0.00045420878450386226, -0.04057878628373146, -0.0011336312163621187, 0.03598039597272873, 0.01715657114982605, 0.04006785526871681, 0.0064404369331896305, 0.02361045405268669, -0.029580295085906982, 0.02804749831557274, 0.006732878275215626, -0.015462426468729973, 0.04762427508831024, -0.012363218702375889, -0.032188739627599716, -0.018272554501891136, 0.004500910639762878, -0.0016647318843752146, 0.009297624230384827, 0.03684091195464134, -0.011354799382388592, 0.03608796000480652, -0.01872970536351204, -0.0030387029983103275, 0.005835385527461767, -0.00941191241145134, -0.013169953599572182, -0.014964940026402473, 0.005949672777205706, -0.020706206560134888, 0.014830484054982662, -0.01636328175663948, 0.03606106713414192, -0.04203090816736221, 0.013687608763575554, 0.0015563268680125475, 0.008262313902378082, -0.0033429095055907965, -0.0191330723464489, 0.0032941692043095827, 0.022427242249250412, 0.010534618981182575, 0.036625783890485764, -0.007260617800056934, 0.002147932769730687, -0.0181380994617939, 0.02547939121723175, 0.029929880052804947, 0.004221914801746607, 0.00011659846495604143, 0.01569100096821785, 0.0024084411561489105, 0.0064942194148898125, -6.18707126704976e-05, 0.022091101855039597, -0.01569100096821785, 0.029822316020727158, 0.006463966798037291, -0.003105930984020233, -0.02214488387107849, 0.005949672777205706, 0.01929442025721073, 0.0035866107791662216, -0.010951431468129158, -0.004692510236054659, -0.026003768667578697, -0.04654862731695175, -0.029929880052804947, 0.03791655972599983, -0.012974993325769901, 0.032672781497240067, 0.009264010936021805, -0.03643754497170448, 0.038669511675834656, -0.0066757346503436565, -0.09487207233905792, -0.0022588588763028383, -0.04332168772816658, 0.002744580851867795, 0.012679190374910831, -0.01952299475669861, -0.028961798176169395, 0.017909523099660873, -0.005630340427160263, -0.002721050987020135, -0.01854146644473076, 0.001545402337796986, -0.010958154685795307, 0.01558343693614006, 0.03030635602772236, -0.01087748073041439, -0.0019731400534510612, -0.0026202090084552765, -0.008235422894358635, -0.001558007556013763, 0.029096253216266632, 0.024538198485970497, -0.003082401119172573, -0.00852450355887413, -0.02285750024020672, -0.012127920985221863, -0.007852223701775074, 0.008712741546332836, 0.018353227525949478, -0.06539934128522873, 0.05405126512050629, 0.022104548290371895, 0.011711107566952705, -0.003006769809871912, -0.010729579254984856, -0.007287508808076382, 0.01971123367547989, -0.03511987626552582, 0.03657200187444687, 0.031812261790037155, 0.01626916229724884, 0.004527802113443613, -0.00793289765715599, -0.016027141362428665, 0.023973483592271805, 0.037486299872398376, -0.016995223239064217, 0.005569835193455219, 0.009425357915461063, -0.028477756306529045, -0.010998491197824478, -0.01890449784696102, 0.011724553070962429, -0.0107900844886899, 0.017801959067583084, -0.009089217521250248, 0.0025076023302972317, -0.034850966185331345, 0.005583280697464943, -0.027886150404810905, 0.010736302472651005, 0.027200425043702126, 0.06007488816976547, 0.0003012652159668505, 0.018407011404633522, -0.0016437232261523604, 0.019092734903097153, 0.030171900987625122, -0.015327971428632736, -0.0027076054830104113, 0.0069648148491978645, 0.0161884892731905, -0.012470783665776253, 0.027523119002580643, -0.018003642559051514, 0.034071121364831924, 0.0026622265577316284, 0.03649132698774338, -0.014601909555494785, 0.018501129001379013, 0.016255715861916542, 0.024040712043642998, -0.01376828271895647, -0.02391970157623291, 0.00011071602057199925, -0.007979956455528736, -0.007529529742896557, 0.019469212740659714, 0.002151294145733118, -0.009640486910939217, -0.027913041412830353, 0.017116233706474304, -0.006971537601202726, 0.011785058304667473, 0.018286000937223434, 0.0074891927652060986, 0.016551518812775612, 0.000503369199577719, -0.07513394951820374, 0.0070185973308980465, 0.024820556864142418, -0.019845688715577126, -0.007953065447509289, -0.0015773356426507235, 0.03068283386528492, 0.006467327941209078, 0.004252167418599129, -0.0004002163477707654, -0.011576651595532894, -0.022897837683558464, -0.00512276915833354, -0.02273648977279663, -0.029096253216266632, 0.011973296292126179, -0.023462552577257156, -0.036222416907548904, -0.059644632041454315, 0.023691127076745033, -0.023839028552174568, -0.016941441223025322, 0.0041412413120269775, 0.009943013079464436, 0.014964940026402473, -0.001889105187729001, -0.006420268677175045, 0.03579215705394745, -0.023839028552174568, 0.0120001882314682, -0.019832242280244827, -0.018944833427667618, -0.018662476912140846, 0.016645638272166252, -0.025237370282411575, 0.004998397547751665, 0.004574861377477646, 0.04888816177845001, -0.0008832070743665099, -0.04439733177423477, -0.014467453584074974, -0.011401859112083912, -0.0013731307117268443, -0.040040962398052216, 0.012470783665776253, -0.041896454989910126, -0.017479265108704567, 0.020800326019525528, 0.002079024212434888, 0.001503384904935956, 0.001756330020725727, -0.003253832459449768, 0.007946343161165714, -0.015206960961222649, 0.011146392673254013, -0.022091101855039597, -0.026151670143008232, 0.015206960961222649, 0.020356621593236923, 0.022117992863059044, 0.039556920528411865, -0.0025294513907283545, 0.01474981103092432, 0.01961711421608925, -0.021499495953321457, -0.016336390748620033, 0.047301579266786575, -0.01113294716924429, -0.02098856307566166, -0.0019210384925827384, -0.03165091574192047, -0.009324515238404274, 0.042595624923706055, -0.0005659752641804516, -0.0024773497134447098, -0.00871946383267641, 0.015946468338370323, 0.00698498310521245, -0.01891794241964817, -0.06781955063343048, 0.024551644921302795, 0.025264261290431023, 0.026245789602398872, -0.008598454296588898, 0.006171524990350008, -0.0225482527166605, 0.009553090669214725, 0.001241195946931839, -0.02410794049501419, 0.03883086144924164, 0.004944615066051483, -0.009492585435509682, 0.022575143724679947, -0.0027748332358896732, -0.00737490551546216, 0.00897493027150631, -0.024739883840084076, 0.026810504496097565, -0.008887534029781818, -0.0156775563955307, -0.054642871022224426, 0.0279399324208498, 0.011065719649195671, -0.007105993572622538, 0.01637672632932663, 0.023691127076745033, 0.017412036657333374, -0.0032050921581685543, -0.02187597192823887, 0.0022588588763028383, 0.020316284149885178, 0.0038622452411800623, -0.04135863110423088, -0.01279347762465477, -0.009734606370329857, -0.0021798659581691027, -0.018084317445755005, -0.028370192274451256, 0.0392342284321785, 0.004591668490320444, -0.011018659919500351, 0.0006231190054677427, -0.0186355859041214, 0.029822316020727158, -0.027671020478010178, -0.047973860055208206, -0.011885900050401688, 0.017008669674396515, 0.0007840459002181888, -0.0012277503265067935, 0.05727820843458176, 0.0058824447914958, 0.02264237031340599, -0.006578254047781229, -0.012732972390949726, -0.016242271289229393, 0.0269584059715271, 0.02245413325726986, -0.02527770586311817, 0.0005437060026451945, -0.006796745117753744, 0.06357074528932571, -0.023691127076745033, -0.023677680641412735, -0.04851168394088745, -0.003277362324297428, -0.004672341980040073, 0.007341291289776564, 0.011193452402949333, 0.02312641218304634, 0.02401382103562355, 0.015193515457212925, 0.017506156116724014, -0.02128436602652073, 0.03568459302186966, 0.01308255735784769, 0.0042925039306283, 0.0015722934622317553, -0.007025320082902908, 0.020356621593236923, -0.0052437796257436275, -0.027993716299533844, 0.015704447403550148, -0.00048320082714781165, 0.01755993813276291, -0.0035294669214636087, -0.005045457277446985, -0.01821877248585224, -0.017277581617236137, -0.032108064740896225, 0.002171462634578347, -0.008847197517752647, -0.004722762852907181, -0.017600275576114655, 0.047973860055208206, 0.0006554724532179534, 0.021244030445814133, 0.001500023528933525, 0.005405126605182886, 0.032188739627599716, -0.018111208453774452, 0.01000351831316948, -0.027388663962483406, 0.013102726079523563, 0.04340235888957977, -0.001617672387510538, 0.003606779035180807, -0.014884267002344131, -0.030118118971586227, -0.002316002734005451, -0.0006777417147532105, 0.041600652039051056, 0.023462552577257156, -0.016201933845877647, -0.03175847977399826, 0.0022168413270264864, 0.0018403648864477873, -0.017694395035505295, -0.015502763912081718, -0.03173159062862396, -0.024739883840084076, -0.027227316051721573, 0.010306043550372124, -0.045822564512491226, -0.009506030939519405, -0.020800326019525528, -0.007516084238886833, 0.004655534867197275, 0.009237118996679783, 0.04716712608933449, -0.01823221892118454, 0.03775521367788315, -0.043778836727142334, -0.00043824216118082404, 0.005643785931169987, 0.011422027833759785, -0.025129804387688637, 0.05862276628613472, -0.019146518781781197, -0.04060567915439606, 0.0017916247015818954, -0.03194671869277954, 0.02244068682193756, -0.0062690055929124355, -0.03737873584032059, -0.008047184906899929, -0.015233851969242096, -0.020154938101768494, -0.00910938624292612, 0.002558023203164339, 0.010406885296106339, 0.014077531173825264, -0.008968207985162735, -0.006931201089173555, 0.02233312278985977, -0.0022185221314430237, -0.016793539747595787, -0.001605907455086708, 0.034447599202394485, -0.011630434542894363, 0.032672781497240067, -0.06029001995921135, -0.0014420393854379654, 0.0028336578980088234, -0.0008533747168257833, -0.011778336018323898, 0.010998491197824478, -0.03939557448029518, 0.0037109823897480965, -0.01929442025721073, 0.0036504773888736963, 0.009593427181243896, 0.05227644741535187, 0.00843038409948349, -0.02018182910978794, 0.000994133180938661, -0.007731213234364986, 0.003492491552606225, 0.0032067729625850916, -0.014467453584074974, 0.01815154403448105, 0.009546367451548576, 0.007415242027491331, -0.03038703091442585, 0.0058824447914958, 0.013324578292667866, 0.01279347762465477, 0.023744909092783928, 0.014090976677834988, 0.00715977605432272, 0.03186604380607605, -0.01265229843556881, 0.0411703921854496, 0.018985170871019363, -0.02697185054421425, 0.010944709181785583, 0.019267529249191284, -0.041331738233566284, -0.01343214325606823, 0.0008533747168257833, -0.025062577798962593, 0.018877606838941574, 0.002211799379438162, 0.026689494028687477, -0.015301079489290714, -0.02753656543791294, -0.0011773293372243643, -0.028773559257388115, -0.0441284216940403, -0.04619904235005379, 0.0362761989235878, -0.009815279394388199, -0.0008441308746114373, 0.0006189172272570431, -0.024538198485970497, -0.011778336018323898, 0.020033927634358406, 0.04049811139702797, 0.0033613971900194883, 0.020033927634358406, 0.02236001379787922, -0.027388663962483406, 0.01792296953499317, 0.015529654920101166, 0.011932959780097008, -0.01587923988699913, -0.020060818642377853, -0.024228950962424278, -0.01940198428928852, -0.029660968109965324, -0.02451130747795105, 0.04792007803916931, -0.015462426468729973, 0.004474019631743431, 0.003953002858906984, 0.015274188481271267, -0.00039685494266450405, 0.015731338411569595, 0.021512942388653755, -0.009492585435509682, 0.0024454165250062943, 0.021835636347532272, -0.0009126193472184241, 0.03076350688934326, -0.013808619230985641, -0.017291026189923286, -0.026044104248285294, -0.00012521204189397395, 0.014171650633215904, -0.018877606838941574, -0.005687484052032232, -0.038077905774116516, -0.01686076819896698, -0.024672655388712883, 0.0006453882670029998, 0.02283060923218727, 0.004776545334607363, 0.04006785526871681, -0.004504272248595953, 0.016349835321307182, -0.04539230838418007, -0.005011843051761389, 0.00876652356237173, 0.00698498310521245, 0.005445463582873344, 0.01902550831437111, 0.010151419788599014, 0.008847197517752647, -0.00999679509550333, 0.014601909555494785, 0.07620959728956223, -0.02920381911098957, -0.032592106610536575, -0.03520055115222931, 0.007455579005181789, -0.0016596898203715682, 0.040551893413066864, 0.010433776304125786, -0.0548848919570446, -0.003413498867303133, 0.004783268086612225, -0.01579856686294079, -0.024349961429834366, -0.0161884892731905, 0.013667440973222256, -0.0027344964910298586, -0.004776545334607363, -0.008833752013742924, -0.011845563538372517, 0.02293817326426506, 0.024766774848103523, -0.007334568537771702, -0.018380120396614075, -0.03261899948120117, -0.022884391248226166, 0.0026857564225792885, 0.014090976677834988, 0.007879114709794521, 0.012443892657756805, 0.013916184194386005, 0.02167428843677044, -0.021338149905204773, 0.009828724898397923, 0.04205780103802681, -0.024336514994502068, 0.028800450265407562, 0.00847744382917881, -0.012464060448110104, -0.020652424544095993, 0.04232671111822128, -0.013586767017841339, 0.003973171580582857, 0.004437044262886047, -0.029580295085906982, -0.015812011435627937, -0.005388319492340088, 0.024659208953380585, 0.03958381339907646, -0.0022185221314430237, 0.008403493091464043, -0.034958530217409134, -0.019240636378526688, -0.0030958468560129404, -0.007287508808076382, 0.0024924760218709707, 0.004699232988059521, -0.007105993572622538, 0.006030346266925335, 0.021015454083681107, 0.01445400808006525, 0.03148956969380379, -0.0161884892731905, -0.020733097568154335, -0.018299445509910583, 0.003516021417453885, -0.04286453500390053, 0.012679190374910831, 0.01445400808006525, 0.03479718416929245, -0.02568107470870018, -0.03506609424948692, 0.008840474300086498, 0.019038952887058258, 0.0411972850561142, 0.021190248429775238, 0.016927996650338173, 0.0489957258105278, -0.011549760587513447, 0.01587923988699913, -0.025627292692661285, -0.01902550831437111, -0.03934179246425629, -0.012665744870901108, -0.02812817133963108, -0.005153021775186062, -0.046225935220718384, 0.016511183232069016, 0.030521485954523087, 0.016941441223025322, 0.0343400314450264, 0.0034034145064651966, 0.02460542693734169, -0.005963118746876717, 0.014480899088084698, -0.011690938845276833, -0.021338149905204773, -0.005183274392038584, 0.011872454546391964, 0.01833978295326233, 0.018366673961281776, 0.021055791527032852, -0.03027946501970291, 0.003784933127462864, 0.020961672067642212, 0.027119752019643784, -0.05614877864718437, -0.042004019021987915, 0.017869187518954277, 0.013257350772619247, 0.022696154192090034, 0.019738124683499336, -0.005959757138043642, -0.01743892766535282, -0.017223799601197243, 0.00017773387662600726, -0.006107658613473177, -0.02686428651213646, 0.002594998572021723, 0.024336514994502068, -0.019563332200050354, 0.003697536885738373, -7.363560871453956e-05, -0.013875847682356834, -0.014279214665293694, 0.016685975715517998, 0.021593615412712097, 0.01695488765835762, 0.015529654920101166, 0.02039695717394352, -0.008053907193243504, 0.03087107092142105, 0.015112841501832008, -0.007516084238886833, -0.0124976746737957, 0.00659170001745224, -0.017022114247083664, -0.0005542103317566216, 0.007314400281757116, -0.004726124461740255, -0.029176928102970123, -0.012033801525831223, -0.023865919560194016, 0.035146769136190414, 0.008779969066381454, 0.0026807142421603203, -0.0058622765354812145, 0.012827091850340366, -0.024094494059681892, -0.014763256534934044, 0.025640737265348434, 0.02331465110182762, -0.0034387093037366867, -0.00182019651401788, -0.012625407427549362, 0.030333247035741806, 0.018017088994383812, 0.012383387424051762, 0.016524627804756165, 0.005015204660594463, 0.018312891945242882, 0.01637672632932663, 0.00871946383267641, 0.016094369813799858, -0.017022114247083664, 0.007643816992640495, 0.02500879392027855, 0.037701431661844254, -0.01499183103442192, -0.001512628747150302, -0.01696833223104477, -0.016349835321307182, 0.016215380281209946, -0.014427116140723228, 0.0026353353168815374, -0.006430352572351694, -0.008020293898880482, 0.0010058981133624911, 0.006460605189204216, 0.029553404077887535, 0.008027016185224056, -0.032780345529317856, -0.01589268632233143, -0.024282732978463173, 0.0038219084963202477, -0.01940198428928852, 0.0022101185750216246, 0.014924603514373302, -0.008921148255467415, 0.011462364345788956, -0.014628800563514233, 0.021647397428750992, -0.02334154210984707, 0.006020262371748686, 0.005620256066322327, -0.004820243455469608, 0.04009474441409111, -0.016322944313287735, -0.03353329747915268, -0.03458205237984657, 0.01259179413318634, 0.03302236646413803, -0.01715657114982605, -0.025116359815001488, -0.0011874135816469789, -0.029580295085906982, 0.0035328282974660397, -0.006040430627763271, 0.032108064740896225, -0.025640737265348434, 0.03584593906998634, -0.029741641134023666, 0.01445400808006525, 0.006937923841178417, -0.0134724797680974, 0.04991002380847931, -0.03216184675693512, -0.02301884815096855, -0.004830327816307545, -0.001138673280365765, -0.0033378673251718283, 0.02569451928138733, -0.020921336486935616, 0.006487496662884951, -0.010783362202346325, -0.02675672061741352, 0.04108971729874611, 0.00945224892348051, -0.012504396960139275, 0.005186636000871658, -0.0026571843773126602, -0.015610327944159508, 0.006420268677175045, -0.016793539747595787, -0.03079039789736271, -0.01147580984979868, 0.02931138314306736, -0.021203693002462387, -0.036410652101039886, -0.016228824853897095, 0.015166623517870903, 0.006400099955499172, 0.01725069060921669, -0.023946592584252357, 0.008813583292067051, -0.020450741052627563, -0.0016554880421608686, -0.005058902781456709, 0.015946468338370323, 0.03506609424948692, 0.006658927537500858, 0.03390977531671524, -0.007025320082902908, -0.02205076441168785, -0.024054158478975296, 0.0017075897194445133, 0.00021124280465301126, -0.015516209416091442, -0.02000703662633896, -0.020746544003486633, 0.016941441223025322, -0.01131446287035942, 0.023852474987506866, 0.03283412754535675, 0.006316065322607756, 0.02812817133963108, 0.012134643271565437, -0.0038487997371703386, -0.01278675440698862, 0.008114412426948547, -0.00421183044090867, -0.029365165159106255, 0.006500942166894674, -0.03079039789736271, 0.0018386841984465718, 0.03904598951339722, -0.004198384936898947, -0.010568232275545597, 0.003381565446034074, 0.031005527824163437, 0.023072630167007446, -0.04243427887558937, 0.009492585435509682, 0.035738375037908554, 0.0037277895025908947, 0.01735825464129448, 0.0005037894006818533, -0.03291480243206024, -0.004974867682904005, -0.023664236068725586, 0.0003836194518953562, 0.0030924854800105095, 0.03493163734674454, -0.012430446222424507, 0.01485737506300211, -0.010447222739458084, 0.013001884333789349, -0.006426991429179907, -0.040336765348911285, 4.8950347263598815e-05, 0.004510995000600815, 0.02920381911098957, -0.005845469422638416, -0.018191881477832794, -0.010890927165746689, -0.00922367349267006, 0.04264940693974495, -0.0061278268694877625, -0.024336514994502068, -0.036222416907548904, 0.0026252511888742447, 0.006904309615492821, -0.0013302728766575456, 0.03076350688934326, 0.0235432256013155, -0.04638728126883507, -0.009136277250945568, -0.021015454083681107, 0.03315681964159012, 0.0018941472517326474, 0.014306106604635715, -0.03657200187444687, -0.01832633651793003, 0.012578347697854042, -0.037593863904476166, -0.024094494059681892, 0.0018756595673039556, -0.017519602552056313, -0.021633952856063843, -0.008827028796076775, 0.030225683003664017, 0.012820368632674217, 0.010178310796618462, 0.04138552024960518, -0.024242395535111427, -0.0027193701826035976, -0.03953003138303757, 0.0038185471203178167, -0.009304347448050976, -0.004574861377477646, 0.010164865292608738, -0.0030050890054553747, 0.005912697408348322, -0.009472416713833809, 0.03751319274306297, 0.02675672061741352, 0.011825394816696644, 0.011482533067464828, -0.007206835318356752, -0.003953002858906984, -0.019576776772737503, -0.011805227026343346, -0.008450552821159363, -0.010326212272047997, 0.001211783615872264, 0.012659021653234959, 0.04711334407329559, 0.01317667681723833, -0.02617856115102768, -0.005677399691194296, -0.014736365526914597, -0.000638665456790477, 0.00032185378950089216, 0.005687484052032232, -0.012363218702375889, -0.011401859112083912, 0.007879114709794521, -0.0036168633960187435, -0.007516084238886833, 0.03380221128463745, 0.02197009138762951, -0.029634077101945877, -0.01038671750575304, -0.02597687765955925, -0.005317730363458395, -0.057708464562892914, -0.03716360777616501, -0.015596882440149784, 0.018164990469813347, 0.01308928057551384, -0.020410403609275818, -0.02187597192823887, 0.006147995591163635, -0.050824325531721115, -0.005566473584622145, 0.022978510707616806, 0.03786277770996094, -0.04012163728475571, 0.007502638269215822, -0.01318340003490448 ], index=0, object='embedding')], model='text-embedding-3-small', object='list', usage=Usage(prompt_tokens=14, total_tokens=14)) |

如果要直接打印嵌入数据,可以使用

|

1 |

print(response.data[0].embedding) |

打印嵌入数据的长度

|

1 2 3 |

print(len(response.data[0].embedding)) 1536 |

为啥 Openai 的嵌入返回的是1536个数据?

ChatGPT 的回答:

OpenAI的嵌入模型(如GPT-3或其它基于Transformer的模型)返回1536个数据点(或称为特征、维度)是由于模型设计和训练过程中的选择。这个数字,1536,指的是嵌入向量的维度,是模型内部表示信息的一个固定大小。

在深度学习和自然语言处理(NLP)中,嵌入是一种将文本(如单词、句子或整个文档)转换为一组数字的技术,使得这些数字能够代表原始文本的某种意义。这些数字通常组织成一个向量。向量的每个维度都是一个特征,可以捕捉一些关于文本的语义信息。

选择1536个维度(或任何特定的维度数)是基于多种因素的考虑:

- 性能和精度:更高的维度通常能够捕获更丰富的语义信息,这可能会提高模型在特定任务上的性能。然而,维度的增加也意味着模型的计算成本和存储需求会增加。1536是经过研发和测试后,平衡了性能、计算复杂度和资源需求的结果。

- 模型架构:OpenAI的模型是基于Transformer架构设计的,这种架构包括了多个自注意力层和前馈网络,它们的大小(维度)会影响模型能够处理的信息类型和量。1536维可以视为设计决策的一部分,以优化模型对于各种自然语言处理任务的通用性和效率。

- 实验和评估:在开发这些模型时,会进行大量的实验来评估不同配置下模型的表现。包括嵌入维度在内的各种参数都会被调整和优化,以找到最佳的设置,既能保持高质量的输出,又能在计算和存储上尽可能高效。

综上所述,1536个数据点的选择反映了在模型设计和开发过程中对于效能、资源和任务广泛性的综合考量。这样的嵌入向量大小旨在为多种应用和任务提供均衡且强大的语义表示能力。

3. 嵌入模型

默认情况下,text-embedding-3-small 嵌入向量的长度为 1536 for 或 text-embedding-3-large 为 3072。您可以通过传入 dimensions 参数来减小嵌入的维度,而不会使嵌入失去其概念表示属性。我们将在嵌入用例部分更详细地介绍嵌入维度。

OpenAI 提供了两个强大的第三代嵌入模型(在模型 ID 中用表示 v3 )。有关更多详细信息,可以阅读嵌入 v3 公告博客文章。

使用量按输入令牌定价,以下是每美元文本页的定价示例(假设每页~800个令牌):

| 模型 | 每美元页数 | EVAL MTEB 评估性能 | 最大输入长度 |

| text-embedding-3-small | 62,500 | 62.3% | 8191 |

| text-embedding-3-large | 9,615 | 64.6% | 8191 |

| text-embedding-ada-002 | 12,500 | 61.0% | 8191 |

4. 使用案例

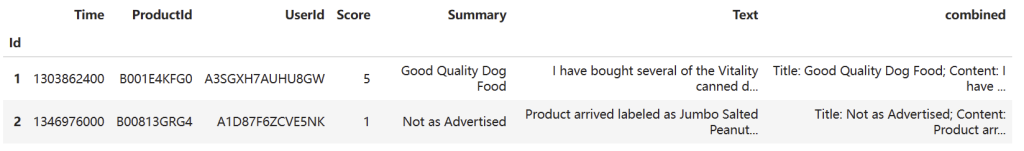

在这里,我们展示了一些具有代表性的用例。我们将使用亚马逊精品评论数据集进行以下示例。

4.1 获取嵌入

该数据集包含截至 2012 年 10 月亚马逊用户留下的 568,454 条食品评论。我们将使用 1,000 条最新评论的子集进行说明。评论是英文的,往往是正面或负面的。每条评论都有一个产品 ID、用户 ID、分数、评论标题(摘要)和评论正文(文本)。例如:

| 产品ID | 用户ID | 得分(SCORE) | 总结(SUMMARY) | 文本(TEXT) |

| B001E4KFG0 | A3SGXH7AUHU8GW | 5 | Good Quality Dog Food 优质狗粮 | I have bought several of the Vitality canned… 我买了几个活力罐头… |

| B00813GRG4 | A1D87F6ZCVE5NK | 1 | Not as Advertised 不像广告上所说的那样 | Product arrived labeled as Jumbo Salted Peanut… 产品到达时标有巨型盐渍花生… |

我们将评论摘要和评论文本合并为一个组合文本。该模型将对此组合文本进行编码并输出单个向量嵌入。

下载后,截取 1000 条记录,文件中不要有空行,保存为 data/fine_food_reviews_1k.csv

4.1.1 加载数据集

您需要安装:pandas、openai、transformers、plotly、matplotlib、scikit-learn、torch (transformer dep)、torchvision 和 scipy。

|

1 2 |

pip install pandas openai transformers plotly matplotlib scikit-learn pip install torch torchvision scipy tiktoken |

代码如下:

|

1 2 3 4 |

import pandas as pd import tiktoken from utils.embeddings_utils import get_embedding |

|

1 2 3 |

embedding_model = "text-embedding-3-small" embedding_encoding = "cl100k_base" max_tokens = 8000 # the maximum for text-embedding-3-small is 8191 |

|

1 2 3 4 5 6 7 8 9 |

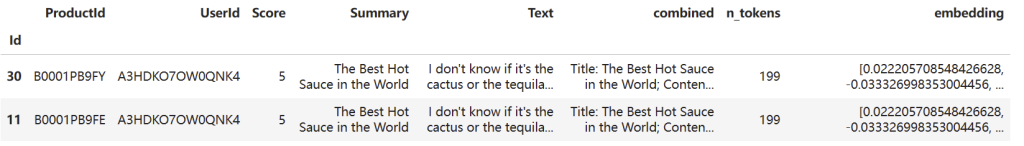

# load & inspect dataset input_datapath = "data/fine_food_reviews_1k.csv" # to save space, we provide a pre-filtered dataset df = pd.read_csv(input_datapath, index_col=0) df = df[["Time", "ProductId", "UserId", "Score", "Summary", "Text"]] df = df.dropna() df["combined"] = ( "Title: " + df.Summary.str.strip() + "; Content: " + df.Text.str.strip() ) df.head(2) |

输出结果:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

# subsample to 1k most recent reviews and remove samples that are too long top_n = 1000 import tiktoken df = df.sort_values("Time").tail(top_n * 2) # first cut to first 2k entries, assuming less than half will be filtered out df.drop("Time", axis=1, inplace=True) encoding = tiktoken.get_encoding(embedding_encoding) # omit reviews that are too long to embed df["n_tokens"] = df.combined.apply(lambda x: len(encoding.encode(x))) df = df[df.n_tokens <= max_tokens].tail(top_n) len(df) |

运行结果:

获取嵌入并保存它们以备将来重用

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

import os from openai import OpenAI OPENAI_API_KEY = os.getenv('OPENAI_API_KEY') client = OpenAI( api_key=OPENAI_API_KEY ) def get_embedding(text: str, model="text-embedding-3-small", **kwargs) -> List[float]: # replace newlines, which can negatively affect performance. text = text.replace("\n", " ") response = client.embeddings.create(input=[text], model=model, **kwargs) return response.data[0].embedding # Ensure you have your API key set in your environment per the README: https://github.com/openai/openai-python#usage # This may take a few minutes df["embedding"] = df.combined.apply(lambda x: get_embedding(x, model=model='text-embedding-3-small')) df.to_csv("data/fine_food_reviews_with_embeddings_1k.csv") |

要从保存的文件加载数据,可以运行以下命令:

|

1 2 3 4 5 |

import pandas as pd import numpy as np df = pd.read_csv('data/fine_food_reviews_with_embeddings_1k.csv') df['ada_embedding'] = df.embedding.apply(eval).apply(np.array) |

5. 减小嵌入尺寸

与使用较小的嵌入相比,使用较大的嵌入(例如将它们存储在矢量存储中以供检索)通常成本更高,并且消耗更多的计算、内存和存储。

我们的两个新嵌入模型都使用一种技术进行训练,该技术允许开发人员权衡使用嵌入的性能和成本。具体来说,开发人员可以缩短嵌入(即从序列末尾删除一些数字),而不会通过传入 dimensions API 参数来使嵌入失去其概念表示属性。例如,在 MTEB 基准测试中, text-embedding-3-large 嵌入可以缩短到 256 的大小,同时仍然优于大小为 1536 的未缩短 text-embedding-ada-002 嵌入。您可以在我们的嵌入 v3 发布博客文章中详细了解更改维度如何影响性能。

通常,建议在创建嵌入时使用该 dimensions 参数。在某些情况下,您可能需要在生成嵌入维度后对其进行更改。手动更改尺寸时,需要确保规范化嵌入的尺寸,如下所示。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

from openai import OpenAI import numpy as np client = OpenAI() def normalize_l2(x): x = np.array(x) if x.ndim == 1: norm = np.linalg.norm(x) if norm == 0: return x return x / norm else: norm = np.linalg.norm(x, 2, axis=1, keepdims=True) return np.where(norm == 0, x, x / norm) response = client.embeddings.create( model="text-embedding-3-small", input="Testing 123", encoding_format="float" ) cut_dim = response.data[0].embedding[:256] norm_dim = normalize_l2(cut_dim) print(norm_dim) |

动态更改尺寸可实现非常灵活的使用。例如,当使用仅支持最多 1024 个维度的嵌入的向量数据存储时,开发人员现在仍然可以使用我们的最佳嵌入模型 text-embedding-3-large ,并为 dimensions API 参数指定值 1024,这将缩短从 3072 个维度向下的嵌入时间,从而牺牲一些准确性以换取较小的向量大小。

6. 使用嵌入的语义文本搜索

通过嵌入我们的搜索查询,然后找到最相似的评论,我们可以以非常有效的方式以非常低的成本在语义上搜索我们的所有评论。

|

1 2 3 4 5 6 7 8 |

import pandas as pd import numpy as np from ast import literal_eval datafile_path = "data/fine_food_reviews_with_embeddings_1k.csv" df = pd.read_csv(datafile_path) df["embedding"] = df.embedding.apply(literal_eval).apply(np.array) |

在这里,我们比较了查询和文档嵌入的余弦相似度,并显示了top_n最佳匹配。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

from utils.embeddings_utils import get_embedding, cosine_similarity # search through the reviews for a specific product def search_reviews(df, product_description, n=3, pprint=True): product_embedding = get_embedding( product_description, model="text-embedding-3-small" ) df["similarity"] = df.embedding.apply(lambda x: cosine_similarity(x, product_embedding)) results = ( df.sort_values("similarity", ascending=False) .head(n) .combined.str.replace("Title: ", "") .str.replace("; Content:", ": ") ) if pprint: for r in results: print(r[:200]) print() return results #美味的豆子 results = search_reviews(df, "delicious beans", n=3) |

运行结果

|

1 2 3 4 5 6 7 8 9 10 11 |

Delicious and additive: Love these chips, and I don't even like black beans. Very tasty. Yum!! Plus, good for you! Black Beans Never Tasted So Good!!!: These are the most fantastic chips I've ever had! I could eat the whole bag myself! They're made with lots of whole grain and beans, and make a complete protein Great Beans!!!: I ordered these for my coffee themed wedding. When they arrived I had to fight off friends because they smelled and tasted so good. I literally had to hide the box to the wedding! The 美味又上瘾:爱上了这些薯片,我甚至不喜欢黑豆。非常好吃。好棒!!而且,对你有好处! 黑豆从未如此美味!!!:这是我吃过的最棒的薯片!我可以自己吃掉整袋!它们由大量全谷物和豆子制成,构成了完整的蛋白质 超赞的豆子!!!:我为我的咖啡主题婚礼订购了这些豆子。当它们到达时,我不得不抵挡住朋友们,因为它们闻起来和尝起来都非常好。我真的不得不把盒子藏到婚礼那天! |

|

1 2 |

# 全麦意大利面 results = search_reviews(df, "whole wheat pasta", n=3) |

运行结果:

|

1 2 3 4 5 6 7 8 9 10 11 |

Annie's Homegrown Organic Whole Wheat Shells & White Cheddar Macaroni & Cheese - 6 oz.: This product is made by Annie's Inc. in Berkely, CA--made in the USA. The product maintains excellent organic Perfect for gluten-free chocolate chip cookies: We made chocolate chip cookies with BRM Garbanzo Bean Flour and the results were fantastic! The brown sugar and chocolate mask any bean taste that oth WOW: I tried to find israeli couscous in a number of upsacale grocery stores and no luck. So I decided to try good ole Amazon.com and selected this product. It is so good..add a few herbs and it's Annie's Homegrown有机全麦贝壳形意大利面和白车达奶酪通心粉 - 6盎司:这款产品由位于加利福尼亚州伯克利的Annie's Inc.生产--美国制造。该产品保持了卓越的有机品质 完美适合无麸质巧克力碎片饼干:我们用BRM鹰嘴豆面粉做了巧克力碎片饼干,结果棒极了!红糖和巧克力掩盖了其他豆类的味道 哇:我试图在许多高档杂货店中找到以色列库斯库斯,但没有运气。所以我决定尝试好老的Amazon.com并选择了这款产品。它非常好..加入一些草药,它就变得更好了 |

我们可以轻松搜索这些评论。为了加快计算速度,我们可以使用一种特殊的算法,旨在通过嵌入更快地进行搜索。

|

1 2 |

# 糟糕的送货服务 results = search_reviews(df, "bad delivery", n=1) |

运行结果:

|

1 2 3 |

disappointing: not what I was expecting in terms of the company's reputation for excellent home delivery products 令人失望:就公司在优质家庭送货产品方面的声誉而言,这并不是我所期待的 |

正如我们所看到的,这可以立即带来很多价值。在此示例中,我们展示了能够快速找到交付失败的示例。

|

1 2 |

# 变质的 results = search_reviews(df, "spoilt", n=1) |

运行结果:

|

1 2 3 |

burnt: These bags had a lot of overcooked brown pieces. also felt very greasy. Had to keep wiping my fingers on a napkin. 烧焦的:这些包装里有很多过度烹饪的棕色碎片。也感觉非常油腻。不得不一直用餐巾纸擦手指。 |

|

1 2 |

# 宠物食品 results = search_reviews(df, "pet food", n=2) |

运行结果:

|

1 2 3 4 5 6 7 |

Palatable and healthy: Before I was educated about feline nutrition, I allowed my cats to become addicted to dry cat food. I always offered both canned and dry, but wish I would have fed them premium My cats LOVE this "diet" food better than their regular food: One of my boys needed to lose some weight and the other didn't. I put this food on the floor for the chubby guy, and the protein-rich, n 美味且健康:在我了解到猫咪营养知识之前,我让我的猫咪沉迷于干猫粮。我总是提供罐装和干猫粮两种,但希望我当时就给它们喂食了高品质的 我的猫比喜欢它们常规食物更爱这种“减肥”食物:我的一个小家伙需要减肥,另一个则不需要。我把这种食物放在地上给胖乎乎的那个,而这种富含蛋白质的,nutrition-packed的食物 |

7. 带嵌入的零样本分类

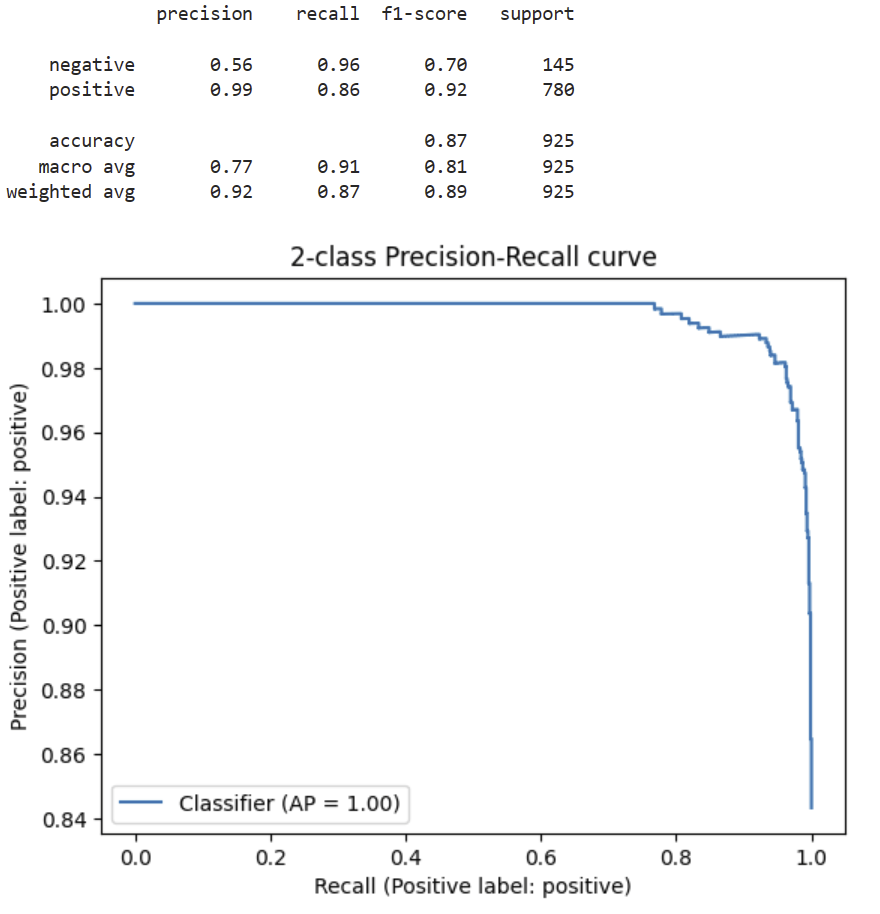

在此笔记本中,我们将使用嵌入和零标记数据对评论的情绪进行分类!

我们将正面情绪定义为 4 星和 5 星评论,将负面情绪定义为 1 星和 2 星评论。3 星评价被视为中立,我们不会在此示例中使用它们。

我们将通过嵌入每个类的描述,然后将新样本与这些类嵌入进行比较来执行零样本分类。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

import pandas as pd import numpy as np from ast import literal_eval from sklearn.metrics import classification_report EMBEDDING_MODEL = "text-embedding-3-small" datafile_path = "data/fine_food_reviews_with_embeddings_1k.csv" df = pd.read_csv(datafile_path) df["embedding"] = df.embedding.apply(literal_eval).apply(np.array) # convert 5-star rating to binary sentiment df = df[df.Score != 3] df["sentiment"] = df.Score.replace({1: "negative", 2: "negative", 4: "positive", 5: "positive"}) |

7.1 零样本分类

为了执行零样本分类,我们希望在没有任何训练的情况下预测样品的标签。为此,我们可以简单地嵌入每个标签的简短描述,例如正和负,然后比较样本嵌入和标签描述之间的余弦距离。

与样本输入相似度最高的标签是预测标签。我们还可以将预测分数定义为到正标签和负标签的余弦距离之间的差值。该分数可用于绘制精确召回率曲线,该曲线可用于通过选择不同的阈值在精确率和召回率之间选择不同的权衡。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

from embeddings_utils import cosine_similarity, get_embedding from sklearn.metrics import PrecisionRecallDisplay def evaluate_embeddings_approach( labels = ['negative', 'positive'], model = EMBEDDING_MODEL, ): label_embeddings = [get_embedding(label, model=model) for label in labels] def label_score(review_embedding, label_embeddings): return cosine_similarity(review_embedding, label_embeddings[1]) - cosine_similarity(review_embedding, label_embeddings[0]) probas = df["embedding"].apply(lambda x: label_score(x, label_embeddings)) preds = probas.apply(lambda x: 'positive' if x>0 else 'negative') report = classification_report(df.sentiment, preds) print(report) display = PrecisionRecallDisplay.from_predictions(df.sentiment, probas, pos_label='positive') _ = display.ax_.set_title("2-class Precision-Recall curve") evaluate_embeddings_approach(labels=['negative', 'positive'], model=EMBEDDING_MODEL) |

运行结果:

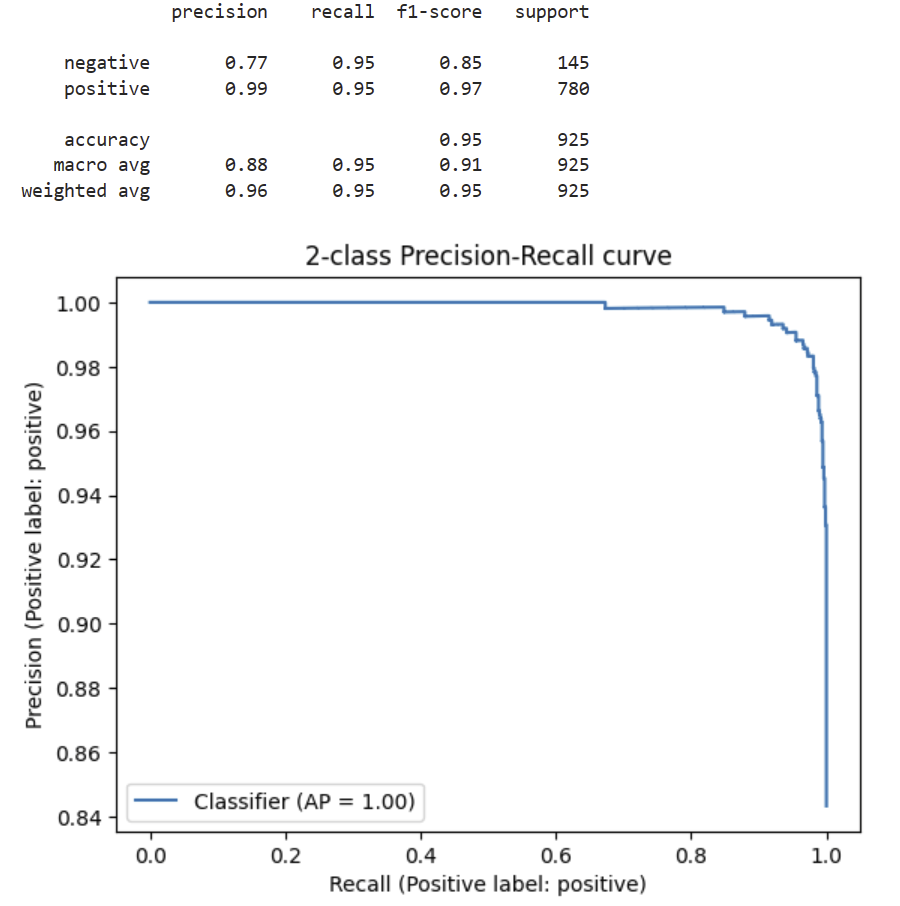

我们可以看到,这个分类器已经表现得非常好了。我们使用了相似性嵌入和最简单的标签名称。让我们尝试通过使用更具描述性的标签名称和搜索嵌入来改进这一点。

|

1 |

evaluate_embeddings_approach(labels=['An Amazon review with a negative sentiment.', 'An Amazon review with a positive sentiment.']) |

运行结果:

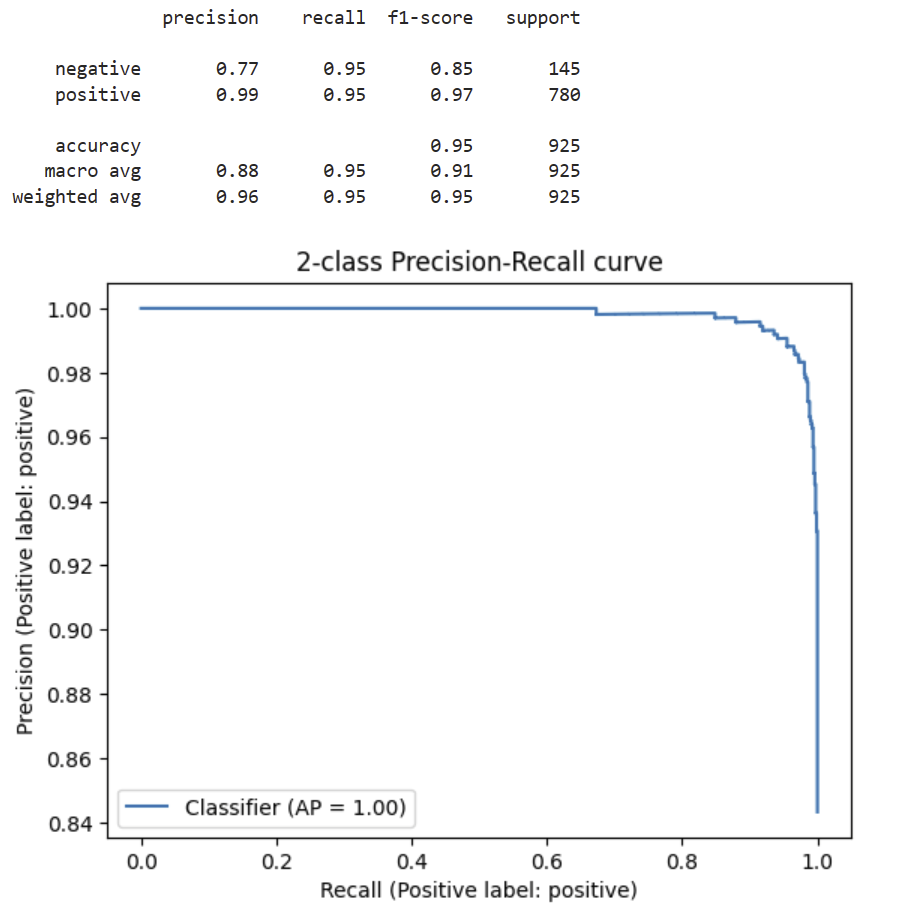

使用搜索嵌入和描述性名称可以进一步提高性能。

|

1 |

evaluate_embeddings_approach(labels=['An Amazon review with a negative sentiment.', 'An Amazon review with a positive sentiment.']) |

运行结果:

如上所示,带有嵌入的零样本分类可以产生很好的结果,尤其是当标签比简单的单词更具描述性时。

8. 用户和产品嵌入

我们根据训练集计算用户和产品嵌入,并在看不见的测试集上评估结果。我们将通过绘制用户和产品相似性与评论分数来评估结果。

8.1. 计算用户和产品嵌入

我们只需对训练集中关于同一产品或同一用户撰写的所有评论进行平均来计算这些嵌入。

|

1 2 3 4 5 6 7 |

import pandas as pd import numpy as np from sklearn.model_selection import train_test_split from ast import literal_eval df = pd.read_csv('data/fine_food_reviews_with_embeddings_1k.csv', index_col=0) # note that you will need to generate this file to run the code below df.head(2) |

运行结果:

|

1 2 3 4 5 6 |

df['babbage_similarity'] = df["embedding"].apply(literal_eval).apply(np.array) X_train, X_test, y_train, y_test = train_test_split(df, df.Score, test_size = 0.2, random_state=42) user_embeddings = X_train.groupby('UserId').babbage_similarity.apply(np.mean) prod_embeddings = X_train.groupby('ProductId').babbage_similarity.apply(np.mean) len(user_embeddings), len(prod_embeddings) |

运行结果:

|

1 |

(775, 189) |

我们可以看到,大多数用户和产品在 50k 示例中只出现过一次。

8.2. 评估嵌入

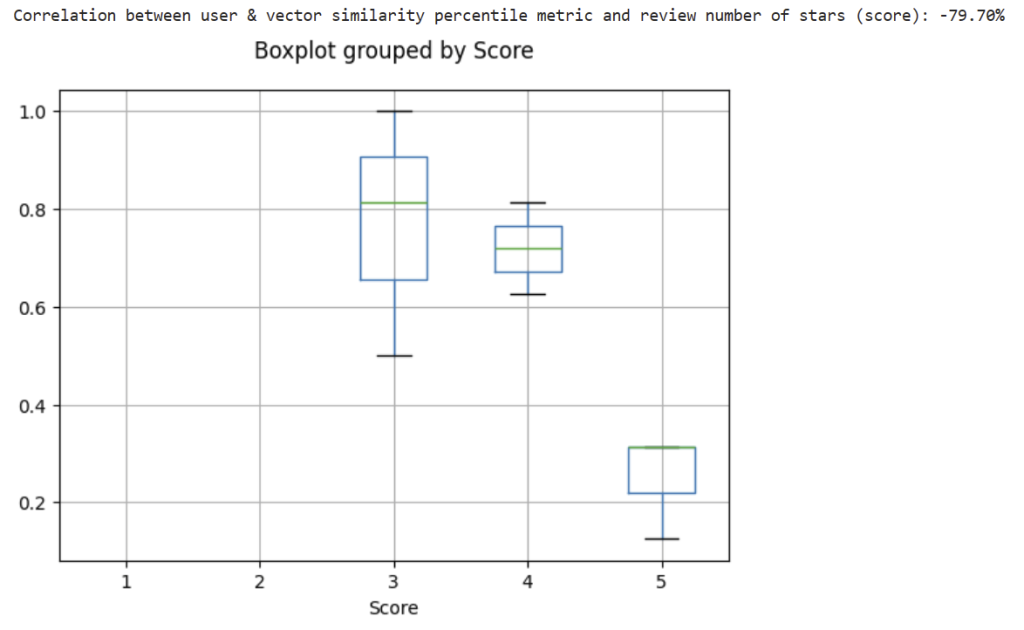

为了评估这些建议,我们查看了用户和产品嵌入在看不见的测试集中的评论中的相似性。我们计算用户和产品嵌入之间的余弦距离,这给了我们一个介于 0 和 1 之间的相似性分数。然后,我们通过计算所有预测分数中相似性分数的百分位数,将分数归一化为在 0 和 1 之间平均分配。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

from embeddings_utils import cosine_similarity # evaluate embeddings as recommendations on X_test def evaluate_single_match(row): user_id = row.UserId product_id = row.ProductId try: user_embedding = user_embeddings[user_id] product_embedding = prod_embeddings[product_id] similarity = cosine_similarity(user_embedding, product_embedding) return similarity except Exception as e: return np.nan X_test['cosine_similarity'] = X_test.apply(evaluate_single_match, axis=1) X_test['percentile_cosine_similarity'] = X_test.cosine_similarity.rank(pct=True) |

通8.2.1 过评分可视化余弦(cosine)相似度

我们将余弦相似度分数按评论分数分组,并绘制每个评论分数的余弦相似性分数分布图。

|

1 |

!pip install statsmodels |

|

1 2 3 4 5 6 7 8 9 10 11 12 |

import matplotlib.pyplot as plt import statsmodels.api as sm correlation = X_test[['percentile_cosine_similarity', 'Score']].corr().values[0,1] print('Correlation between user & vector similarity percentile metric and review number of stars (score): %.2f%%' % (100*correlation)) # boxplot of cosine similarity for each score X_test.boxplot(column='percentile_cosine_similarity', by='Score') plt.title('') plt.show() plt.close() |

运行结果:

我们可以观察到一个疲软的趋势,表明用户和产品嵌入之间的相似度得分越高,评论得分就越高。因此,用户和产品嵌入可以弱预测评论分数 – 甚至在用户收到产品之前!

由于该信号的工作方式与更常用的协同滤波不同,因此它可以作为附加功能,略微提高现有问题的性能。

9. 聚类(Clustering)

我们使用一个简单的 k 均值算法来演示如何进行聚类。聚类分析可以帮助发现数据中有价值的隐藏分组

|

1 2 3 4 5 6 7 8 9 10 11 12 |

# imports import numpy as np import pandas as pd from ast import literal_eval # load data datafile_path = "./data/fine_food_reviews_with_embeddings_1k.csv" df = pd.read_csv(datafile_path) df["embedding"] = df.embedding.apply(literal_eval).apply(np.array) # convert string to numpy array matrix = np.vstack(df.embedding.values) matrix.shape |

运行结果:

|

1 |

(1000, 1536) |

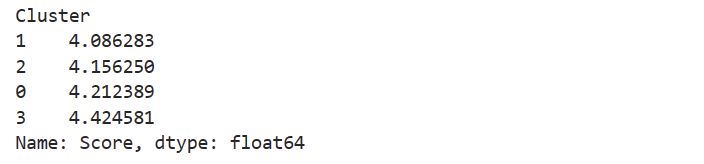

9.1 使用 K-means 查找聚类

我们展示了 K 均值的最简单用法。您可以选择最适合您的用例的集群数量。

|

1 2 3 4 5 6 7 8 9 10 |

from sklearn.cluster import KMeans n_clusters = 4 kmeans = KMeans(n_clusters=n_clusters, init="k-means++", random_state=42) kmeans.fit(matrix) labels = kmeans.labels_ df["Cluster"] = labels df.groupby("Cluster").Score.mean().sort_values() |

运行结果:

|

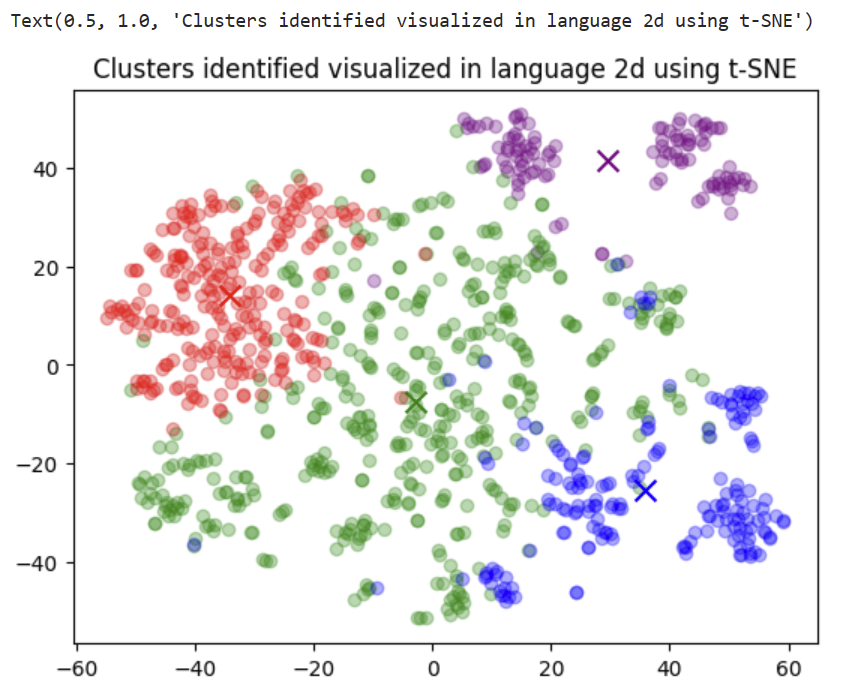

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

from sklearn.manifold import TSNE import matplotlib import matplotlib.pyplot as plt tsne = TSNE(n_components=2, perplexity=15, random_state=42, init="random", learning_rate=200) vis_dims2 = tsne.fit_transform(matrix) x = [x for x, y in vis_dims2] y = [y for x, y in vis_dims2] for category, color in enumerate(["purple", "green", "red", "blue"]): xs = np.array(x)[df.Cluster == category] ys = np.array(y)[df.Cluster == category] plt.scatter(xs, ys, color=color, alpha=0.3) avg_x = xs.mean() avg_y = ys.mean() plt.scatter(avg_x, avg_y, marker="x", color=color, s=100) plt.title("Clusters identified visualized in language 2d using t-SNE") |

运行结果:

二维投影中聚类的可视化。在这次运行中,绿色集群 (#1) 似乎与其他集群完全不同。让我们看看每个集群中的一些示例。

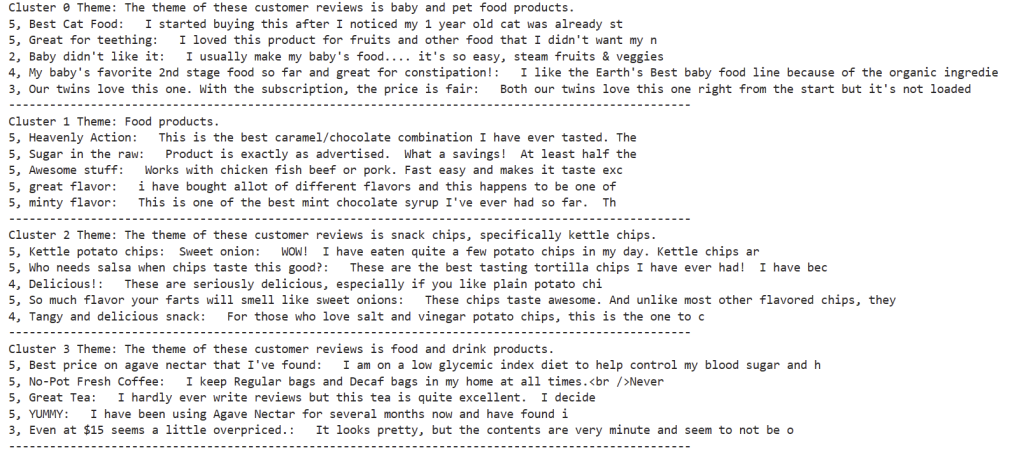

9.2 集群中的文本样本和集群命名

让我们展示每个聚类的随机样本。我们将使用 gpt-4 根据该集群的 5 条评论的随机样本来命名集群。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 |

from openai import OpenAI import os #OPENAI_API_KEY", "<your OpenAI API key if not set as env var>") client = OpenAI(api_key=os.environ.get() # Reading a review which belong to each group. rev_per_cluster = 5 for i in range(n_clusters): print(f"Cluster {i} Theme:", end=" ") reviews = "\n".join( df[df.Cluster == i] .combined.str.replace("Title: ", "") .str.replace("\n\nContent: ", ": ") .sample(rev_per_cluster, random_state=42) .values ) messages = [ {"role": "user", "content": f'What do the following customer reviews have in common?\n\nCustomer reviews:\n"""\n{reviews}\n"""\n\nTheme:'} ] response = client.chat.completions.create( model="gpt-4", messages=messages, temperature=0, max_tokens=64, top_p=1, frequency_penalty=0, presence_penalty=0) print(response.choices[0].message.content.replace("\n", "")) sample_cluster_rows = df[df.Cluster == i].sample(rev_per_cluster, random_state=42) for j in range(rev_per_cluster): print(sample_cluster_rows.Score.values[j], end=", ") print(sample_cluster_rows.Summary.values[j], end=": ") print(sample_cluster_rows.Text.str[:70].values[j]) print("-" * 100) |

运行结果:

请务必注意,群集不一定与您打算使用它们的目的相匹配。较大的聚类将专注于更具体的模式,而少量聚类通常将专注于数据中最大的差异。

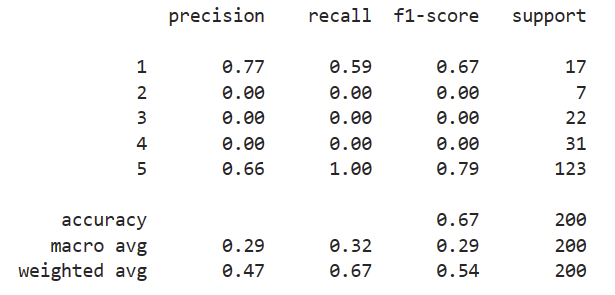

10. 使用嵌入进行分类

有很多方法可以对文本进行分类。此笔记本分享了一个使用嵌入进行文本分类的示例。对于许多文本分类任务,我们已经看到微调模型比嵌入模型做得更好。请参阅 Fine-tuned_classification.ipynb 中用于分类的微调模型示例。我们还建议使用比嵌入维度更多的示例,我们在这里没有完全实现。

在这个文本分类任务中,我们根据评论文本的嵌入来预测食品评论的分数(1 到 5)。我们将数据集拆分为以下所有任务的训练集和测试集,因此我们可以真实地评估看不见的数据的性能。数据集是在 Get_embeddings_from_dataset Notebook 中创建的。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

import pandas as pd import numpy as np from ast import literal_eval from sklearn.ensemble import RandomForestClassifier from sklearn.model_selection import train_test_split from sklearn.metrics import classification_report, accuracy_score datafile_path = "data/fine_food_reviews_with_embeddings_1k.csv" df = pd.read_csv(datafile_path) df["embedding"] = df.embedding.apply(literal_eval).apply(np.array) # convert string to array # split data into train and test X_train, X_test, y_train, y_test = train_test_split( list(df.embedding.values), df.Score, test_size=0.2, random_state=42 ) # train random forest classifier clf = RandomForestClassifier(n_estimators=100) clf.fit(X_train, y_train) preds = clf.predict(X_test) probas = clf.predict_proba(X_test) report = classification_report(y_test, preds) print(report) |

运行结果:

我们可以看到,该模型已经学会了体面地区分类别。5 星评论总体上表现最佳,这并不奇怪,因为它们是数据集中最常见的。

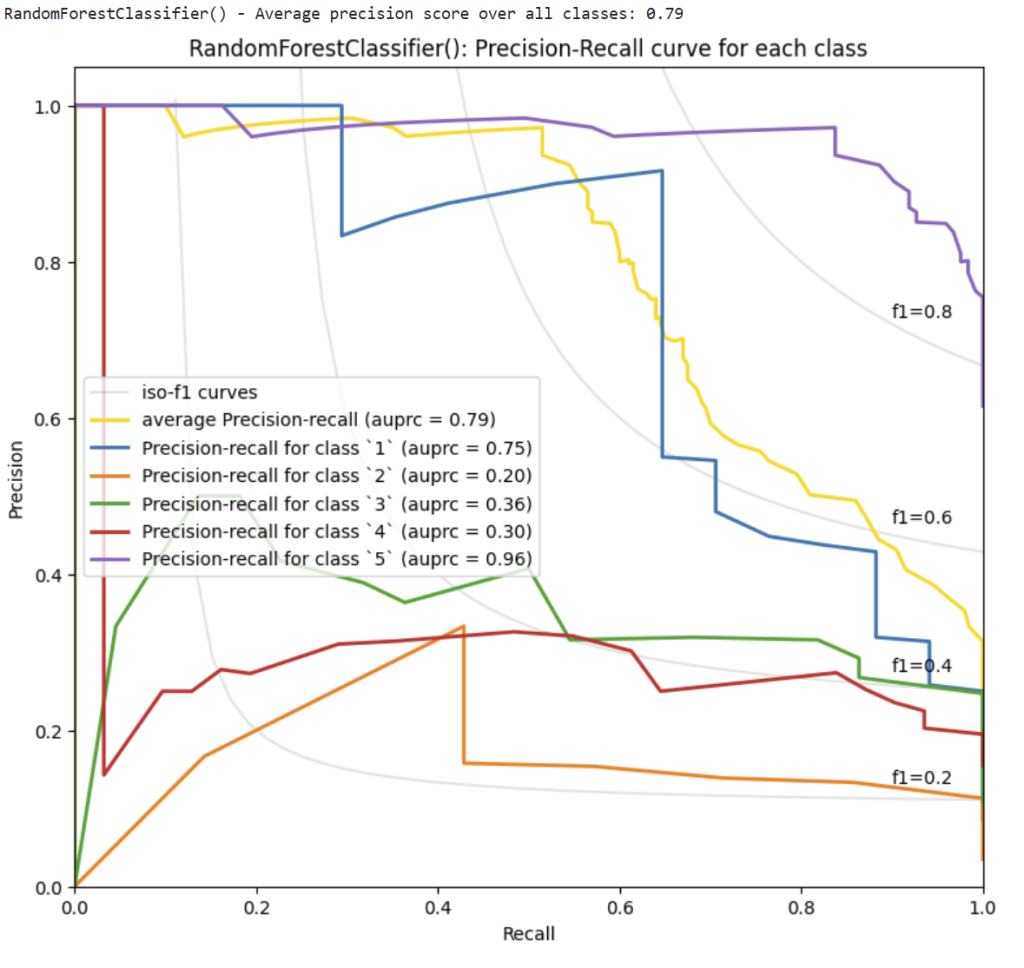

|

1 2 3 |

from embeddings_utils import plot_multiclass_precision_recall plot_multiclass_precision_recall(probas, y_test, [1, 2, 3, 4, 5], clf) |

运行结果:

不出所料,5 星和 1 星评论似乎更容易预测。也许有了更多的数据,可以更好地预测 2-4 颗星之间的细微差别,但人们如何使用中间分数也可能有更多的主观性。

11. 使用嵌入的回归

回归意味着预测一个数字,而不是其中一个类别。我们将根据评论文本的嵌入来预测分数。我们将数据集拆分为以下所有任务的训练集和测试集,因此我们可以真实地评估看不见的数据的性能。数据集是在 Get_embeddings_from_dataset Notebook 中创建的。

我们预测的是评论的分数,即 1 到 5 之间的数字(1 星为负面,5 星为正面)。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

import pandas as pd import numpy as np from ast import literal_eval from sklearn.ensemble import RandomForestRegressor from sklearn.model_selection import train_test_split from sklearn.metrics import mean_squared_error, mean_absolute_error datafile_path = "data/fine_food_reviews_with_embeddings_1k.csv" df = pd.read_csv(datafile_path) df["embedding"] = df.embedding.apply(literal_eval).apply(np.array) X_train, X_test, y_train, y_test = train_test_split(list(df.embedding.values), df.Score, test_size=0.2, random_state=42) rfr = RandomForestRegressor(n_estimators=100) rfr.fit(X_train, y_train) preds = rfr.predict(X_test) mse = mean_squared_error(y_test, preds) mae = mean_absolute_error(y_test, preds) print(f"text-embedding-3-small performance on 1k Amazon reviews: mse={mse:.2f}, mae={mae:.2f}") |

运行结果:

|

1 |

text-embedding-3-small performance on 1k Amazon reviews: mse=0.53, mae=0.54 |

|

1 2 3 4 5 |

bmse = mean_squared_error(y_test, np.repeat(y_test.mean(), len(y_test))) bmae = mean_absolute_error(y_test, np.repeat(y_test.mean(), len(y_test))) print( f"Dummy mean prediction performance on Amazon reviews: mse={bmse:.2f}, mae={bmae:.2f}" ) |

运行结果:

|

1 |

Dummy mean prediction performance on Amazon reviews: mse=1.60, mae=1.01 |

我们可以看到,嵌入能够预测分数,每个分数预测的平均误差为 0.53。这大致相当于完美地预测了一半的评论,并减少了一半的一星。

您还可以训练分类器来预测标签,或使用现有 ML 模型中的嵌入来对自由文本要素进行编码。